Have you ever described an app idea in plain English and watched an AI bring it to life? That’s vibe coding—the revolutionary practice of using natural language prompts to build software. As a developer who has shipped multiple SaaS tools and 2D gaming apps using this method, I’ve tested the frontier of AI models to find the ultimate coding partner.

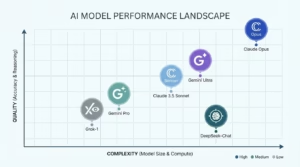

After extensive projects with Claude Opus 4.5, Claude Sonnet 4.5, Gemini 3 Pro, Gemini Flash, Grok 4.1/4.2, and DeepSeek R1, a clear winner emerged. This isn’t just about benchmark scores; it’s about which model consistently understands your vision, writes robust code, and turns complex prompts into working applications.

If you’re choosing an AI for your next vibe coding project, this hands-on, experience-driven comparison will save you time, money, and frustration. Let’s dive in.


What is Vibe Coding? Beyond the Hype

Vibe coding is more than a buzzword. It’s a development paradigm where you focus on the high-level vision and user experience—the “vibe”—while an AI handles the implementation details. You provide loose, iterative directions like “add a secure user dashboard with analytics charts,” and the model generates the corresponding code, often across multiple files.

This approach is transformative for rapid prototyping, MVP development, and projects where speed-to-market is critical. It democratizes development, allowing founders, designers, and junior developers to translate ideas into tangible products. However, the quality of the output is inextricably linked to the capability of the underlying AI model.

My Vibe Coding Journey: From Concept to Shipped Products

My testing wasn’t academic. I built real, functional projects:

- SaaS Tools: A customer analytics dashboard with real-time data visualizations, a project management tool with Kanban boards and user roles, and an internal admin panel for content moderation.
- 2D Gaming Apps: A Pac-Man-inspired arcade game with ghost AI, a physics-based puzzle game, and a browser-based multiplayer card game.

Each project involved hundreds of prompts, iterative debugging, and scaling from a single file to a full-stack application. This real-world stress test revealed the true strengths and weaknesses of each AI model.

The Contenders: A Lineup of AI Powerhouses

I put the following models through their paces, using their official APIs and interfaces:

- Anthropic’s Suite: Claude Opus 4.5 (the flagship) and Claude Sonnet 4.5 (the balanced option).
- Google’s Gemini Family: Gemini 3 Pro (the deep thinker) and Gemini 3 Flash (the speedster).
- xAI’s Contender: Grok 4.1 & 4.2 (the context-savvy newcomer).
- The Open-Source Challenger: DeepSeek R1 (the privacy-focused model).

Evaluation Criteria: What Makes a Great Vibe Coding AI?

I judged each model on the following axes, crucial for practical development:

- Prompt Comprehension: Does it grasp nuanced intent or require constant clarification?
- Code Quality & Architecture: Is the output production-ready, clean, and well-structured?
- Reasoning & Iteration: Can it debug its own code and handle complex, multi-step tasks?
- Context Management: How well does it maintain coherence across long coding sessions?
- Frontend vs. Backend Strength: Is it better at UI generation or business logic?

Model-by-Model Breakdown: Strengths, Weaknesses, and Verdicts

1. Claude Opus 4.5: The Undisputed Champion

Verdict: Best for complex, production-grade SaaS and game logic.

Opus 4.5 wasn’t just good; it was in a league of its own for serious development. It excelled in architectural reasoning, often proposing scalable patterns I hadn’t explicitly requested. When building the analytics dashboard, it correctly implemented a modular service layer without being prompted. Its code was consistently clean, secure, and well-documented.

Benchmarks back this up: Claude Opus 4.5 leads in coding accuracy, achieving 80.9% on SWE-bench Verified. In practice, this meant far fewer bugs and more “it just works” moments. For game development, its logical precision was invaluable for implementing collision detection and ghost AI behavior. It’s the most reliable partner for projects where correctness is non-negotiable.
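To make “collision detection” concrete: in a 2D game the usual starting point is an axis-aligned bounding box (AABB) overlap test. The sketch below is a hypothetical illustration of that technique, not code generated by Opus 4.5 or any other model; the `Rect` type and `collides` function are names chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned box: top-left corner (x, y) plus width and height, in pixels."""
    x: float
    y: float
    w: float
    h: float


def collides(a: Rect, b: Rect) -> bool:
    """Return True if the two boxes overlap.

    Boxes whose edges merely touch do not count as colliding, which avoids
    false hits when a sprite slides flush along a wall.
    """
    return (
        a.x < b.x + b.w
        and b.x < a.x + a.w
        and a.y < b.y + b.h
        and b.y < a.y + a.h
    )


player = Rect(10, 10, 16, 16)
ghost = Rect(20, 20, 16, 16)
print(collides(player, ghost))                 # True: the boxes overlap
print(collides(player, Rect(26, 10, 16, 16)))  # False: edges only touch
```

Tile-based or swept collision is often layered on top for fast-moving objects, but this four-comparison check is the core that the rest builds on.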

2. Claude Sonnet 4.5: The Cost-Effective Powerhouse

Verdict: The best value for money for most vibe coding tasks.

Sonnet 4.5 delivers perhaps 90% of Opus’s capability at a significantly lower cost. It’s incredibly efficient, producing high-quality code quickly. One review of its predecessor, Sonnet 4, notes that it “produces code that’s straight-out textbook material” and scores highly on benchmarks. For many SaaS MVPs and simpler games, Sonnet is the smartest choice, offering an outstanding balance of performance and price.

3. Gemini 3 Pro: The Frontend & “Vibe” Specialist

Verdict: Excellent for UI-heavy prototypes and creative frontend work.

Google markets Gemini 3 Pro as a top “vibe coding” model for generating rich, interactive interfaces. This held true in my tests. When prompted for a “sleek, modern dashboard with a dark mode toggle and animated graphs,” Gemini 3 Pro produced remarkably polished HTML/CSS/JS, often with creative flourishes. It understands design language exceptionally well.

However, for backend logic and complex state management, it sometimes offered simplistic solutions or required more hand-holding than Claude. It’s a fantastic tool for frontend-focused prototyping.

4. Gemini Flash: The Speed Demon for Simple Tasks

Verdict: Good for quick, simple code snippets and ideation.

As a CNET experiment found, Flash is faster but often requires more manual, specific prompting compared to the more autonomous Gemini 3 Pro. In my work, Flash was useful for generating isolated components or functions rapidly. However, for orchestrating a full-stack feature, it lacked the deep reasoning and initiative of its Pro sibling or the Claude models. It’s a complementary tool, not a primary driver.

5. Grok 4.2: The Context-Aware Codebase Whisperer

Verdict: A strong niche player for extending and modifying existing codebases.

Grok shines in its ability to deeply understand an existing codebase. One developer praised Grok 4 for its “context saturation,” noting it “burrows into your files until it’s got the whole picture”. I found this to be accurate. When tasked with “add JWT authentication to this existing Flask app,” Grok 4.2 expertly located the correct files and inserted clean, appropriate code. It’s less opinionated and more literal in following instructions, which can be a virtue for precise modifications.

6. DeepSeek R1: The Open-Source Game Dev Contender

Verdict: A compelling option for game development, especially with hardware constraints.

DeepSeek R1 is a rising star, particularly in game development circles. Tutorials showcase its use for “vibe coding” complex games like Pac-Man, though it can struggle with consistent maze generation. Its major advantage is flexibility; it can run offline on capable hardware (like AMD MI300X GPUs), offering data privacy and cost control. For indie game devs or those with specific infrastructure needs, DeepSeek R1 is a formidable and cost-effective alternative.

Head-to-Head: SaaS Development Showdown

| Task | Claude Opus 4.5 | Gemini 3 Pro | Grok 4.2 |
|---|---|---|---|
| Build a CRUD API | Flawless. Implements RESTful patterns, error handling, and data validation impeccably. | Functional, but may need prompting for best practices like input sanitization. | Accurate and literal. Does exactly what’s asked, but may not add proactive safeguards. |
| Create a Data Dashboard | Strong. Builds logical backend endpoints and a serviceable, clean frontend. | Excellent. Generates the most visually impressive and interactive UI components. | Weaker. Focuses on data logic; frontend output is very basic. |
| Debug a Complex Bug | Superior. Reasons through the problem, proposes and tests multiple hypotheses. | Good. Identifies issues but may provide fixes that introduce new edge cases. | Moderate. Good at tracing errors in existing code but less creative in solutions. |

Winner for SaaS: Claude Opus 4.5. Its end-to-end understanding and production-minded code quality make it the safest, most efficient choice for building robust applications.
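The “data validation” and “input sanitization” in the CRUD row usually mean checking a request body before it reaches the database. Below is a minimal, framework-agnostic sketch, assuming a hypothetical create-project endpoint for a Kanban-style tool whose body has a required `name` and an optional `status`; the field names, allowed statuses, and length limit are all illustrative.

```python
from typing import Any

ALLOWED_STATUSES = {"todo", "in_progress", "done"}  # hypothetical Kanban columns
ALLOWED_FIELDS = {"name", "status"}
MAX_NAME_LENGTH = 120


def validate_project(body: Any) -> tuple[dict, list[str]]:
    """Validate a create-project payload before it touches the database.

    Returns (clean_data, errors). A non-empty error list means the handler
    should reply with a 4xx status (400 or 422) instead of inserting a row.
    """
    if not isinstance(body, dict):
        return {}, ["body must be a JSON object"]

    errors: list[str] = []

    name = body.get("name")
    if not isinstance(name, str) or not name.strip():
        errors.append("name is required and must be a non-empty string")
    elif len(name.strip()) > MAX_NAME_LENGTH:
        errors.append(f"name must be at most {MAX_NAME_LENGTH} characters")

    status = body.get("status", "todo")
    if not isinstance(status, str) or status not in ALLOWED_STATUSES:
        errors.append(f"status must be one of {sorted(ALLOWED_STATUSES)}")

    # Rejecting unexpected keys stops clients from smuggling in fields like "owner_id".
    unknown = set(body) - ALLOWED_FIELDS
    if unknown:
        errors.append(f"unknown fields: {sorted(unknown)}")

    if errors:
        return {}, errors
    return {"name": name.strip(), "status": status}, []
```

Returning every error at once, rather than failing on the first, lets the frontend highlight all problem fields in a single round trip.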

Head-to-Head: 2D Game Development Arena

| Task | Claude Opus 4.5 | Gemini 3 Pro | DeepSeek R1 |
|---|---|---|---|
| Implement Game Physics | Superior. Writes precise, efficient collision detection and movement logic. | Creative but sometimes over-engineered. Can prioritize “cool effects” over performance. | Good. Follows instructions well; a solid, predictable performer. |
| Design Game AI (e.g., Ghosts) | Outstanding. Creates logical, tunable state machines for enemy behavior. | Attempts complex behaviors but can lead to unpredictable or buggy AI. | Basic. Implements straightforward follow/pathfinding logic reliably. |
| Generate Asset Management Code | Very Good. Builds scalable systems for loading and managing sprites/sounds. | Good. May integrate suggestions for visual effects and transitions. | Focused on function. Code works but lacks optimization flourishes. |

Winner for 2D Games: Claude Opus 4.5. Game logic demands precision and reliability. Opus’s strong logical reasoning produces stable, performant, and debuggable code, which is far more valuable than flashy but unstable features.
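For readers new to game AI, a “tunable state machine” for Pac-Man-style ghosts can be as small as the sketch below. It models the three classic ghost modes (scatter, chase, frightened) with their durations exposed as parameters. The class name, default durations, and switching rules are simplified assumptions for illustration, not the arcade’s exact timing and not output from any model I tested.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    SCATTER = auto()     # retreat toward a home corner
    CHASE = auto()       # pursue the player
    FRIGHTENED = auto()  # flee after the player eats a power pellet


@dataclass
class GhostBrain:
    # Tunable durations in seconds (illustrative defaults, not arcade values).
    scatter_time: float = 7.0
    chase_time: float = 20.0
    frightened_time: float = 6.0
    mode: Mode = Mode.SCATTER
    timer: float = 0.0
    # Where to return once FRIGHTENED ends; the scatter/chase clock is paused meanwhile.
    resume_mode: Mode = Mode.SCATTER
    resume_timer: float = 0.0

    def power_pellet_eaten(self) -> None:
        """Enter FRIGHTENED, saving the current scatter/chase state."""
        if self.mode is not Mode.FRIGHTENED:
            self.resume_mode, self.resume_timer = self.mode, self.timer
        self.mode, self.timer = Mode.FRIGHTENED, 0.0  # a second pellet restarts the fright timer

    def update(self, dt: float) -> Mode:
        """Advance the clock by dt seconds and apply at most one transition."""
        self.timer += dt
        if self.mode is Mode.FRIGHTENED and self.timer >= self.frightened_time:
            self.mode, self.timer = self.resume_mode, self.resume_timer
        elif self.mode is Mode.SCATTER and self.timer >= self.scatter_time:
            self.mode, self.timer = Mode.CHASE, 0.0
        elif self.mode is Mode.CHASE and self.timer >= self.chase_time:
            self.mode, self.timer = Mode.SCATTER, 0.0
        return self.mode
```

Because the durations are plain fields, tuning difficulty means passing different numbers (for example `GhostBrain(chase_time=30.0)`) rather than rewriting control flow. Per-mode target selection and pathfinding would sit on top, reading `mode` each frame.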

Final Rankings and Recommendations

- 🏆 Overall Winner: Claude Opus 4.5. It is the most intelligent, reliable, and versatile model for serious vibe coding across both SaaS and game development. It’s worth the premium for projects where quality and scalability matter.
- 🥈 Best Value: Claude Sonnet 4.5. For most developers and most projects, Sonnet provides phenomenal capability at a much lower cost. Start here.
- 🥉 Best for UI/Frontend: Gemini 3 Pro. If your primary goal is stunning, interactive prototype frontends, Gemini 3 Pro is a magical tool.
- Niche Specialists:
  - Grok 4.2: Choose it for working within and extending large, existing codebases.
  - DeepSeek R1: An excellent choice for game development, especially if you value open-source models or have specific hardware.
  - Gemini Flash: Use it for generating quick code snippets and boilerplate.

Pro Tips for Vibe Coding Success

- Iterate, Don’t Dictate: Treat the AI as a junior partner. Start with a high-level vibe, then refine through successive prompts (e.g., “Now make the login form modal pop up smoothly”).
- Provide Context: When working on existing projects, paste relevant code snippets or architecture outlines to ground the model.
- Ask for Explanations: Prompting “Explain the approach you’re taking” can uncover misunderstandings before code is written.
- Embrace Hybrid Workflows: Use Opus for core architecture, Gemini for UI polish, and Grok for refactoring. No single model has to do everything.
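The “Provide Context” tip is easy to script. The sketch below gathers source files into one prompt-ready block with a path header per file and a rough size budget. The function name, the 40,000-character default, and the `### File:` header format are arbitrary choices for illustration; models actually measure their limits in tokens, so treat the character budget as an approximation.

```python
from pathlib import Path
from typing import Iterable


def bundle_context(paths: Iterable[str], max_chars: int = 40_000) -> str:
    """Join source files into one prompt-ready string, each under a path header.

    Files are added in the order given until the next one would push the
    total past max_chars, so list the most relevant files first.
    """
    parts: list[str] = []
    used = 0
    for p in paths:
        path = Path(p)
        if not path.is_file():
            continue  # skip missing paths rather than failing the whole bundle
        text = path.read_text(encoding="utf-8", errors="replace")
        chunk = f"### File: {path.as_posix()}\n{text.rstrip()}\n\n"
        if used + len(chunk) > max_chars:
            break  # budget reached; later files are left out
        parts.append(chunk)
        used += len(chunk)
    return "".join(parts)
```

You would then prepend the result to your actual request, e.g. `bundle_context(["app/models.py", "app/routes.py"])` followed by “Add JWT authentication to these routes” (hypothetical paths).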

The Future of Vibe Coding

The technology is evolving at a breathtaking pace. Models like Claude Opus 4.5 already mark the line between “good enough” and “exceptional.” As models become more agentic, capable of planning and executing multi-step tasks, the role of the developer will shift further toward being a director of AI talent.

For now, choosing the right model is your most critical leverage point. Based on my hands-on experience building and shipping real applications, Claude Opus 4.5 stands as the most powerful engine for turning your vision into reality. Give it a clear prompt, and be prepared to be impressed.
